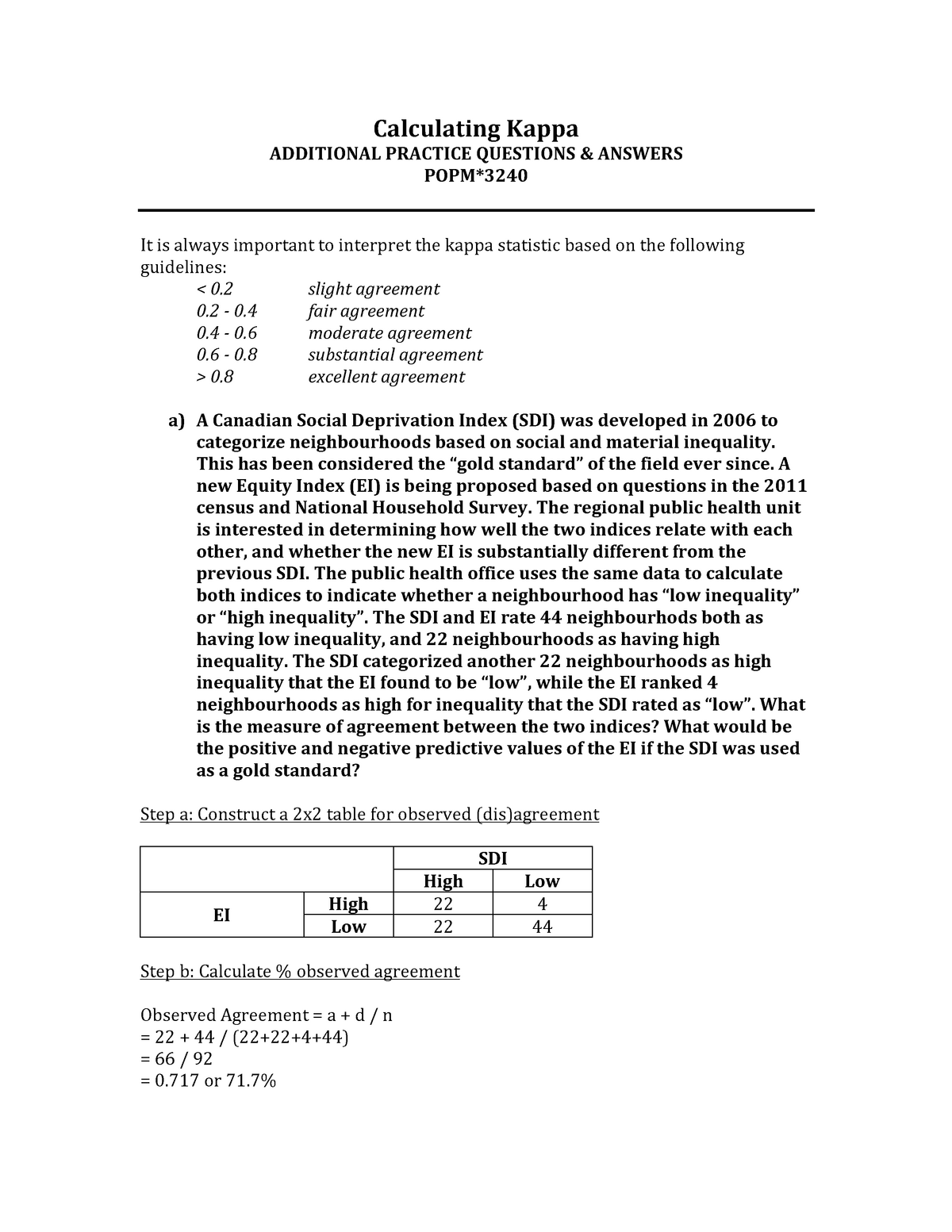

Kappa Practice Answers - Calculating Kappa ADDITIONAL PRACTICE QUESTIONS & ANSWERS - StuDocu

![[PDF] A Simplified Cohen's Kappa for Use in Binary Classification Data Annotation Tasks | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/0efde960b887a6daffffc34d4e729965c1c972ee/6-Table3-1.png)

[PDF] A Simplified Cohen's Kappa for Use in Binary Classification Data Annotation Tasks | Semantic Scholar

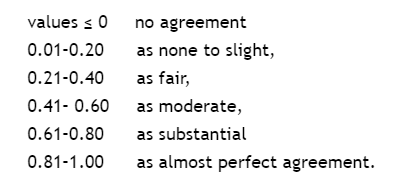

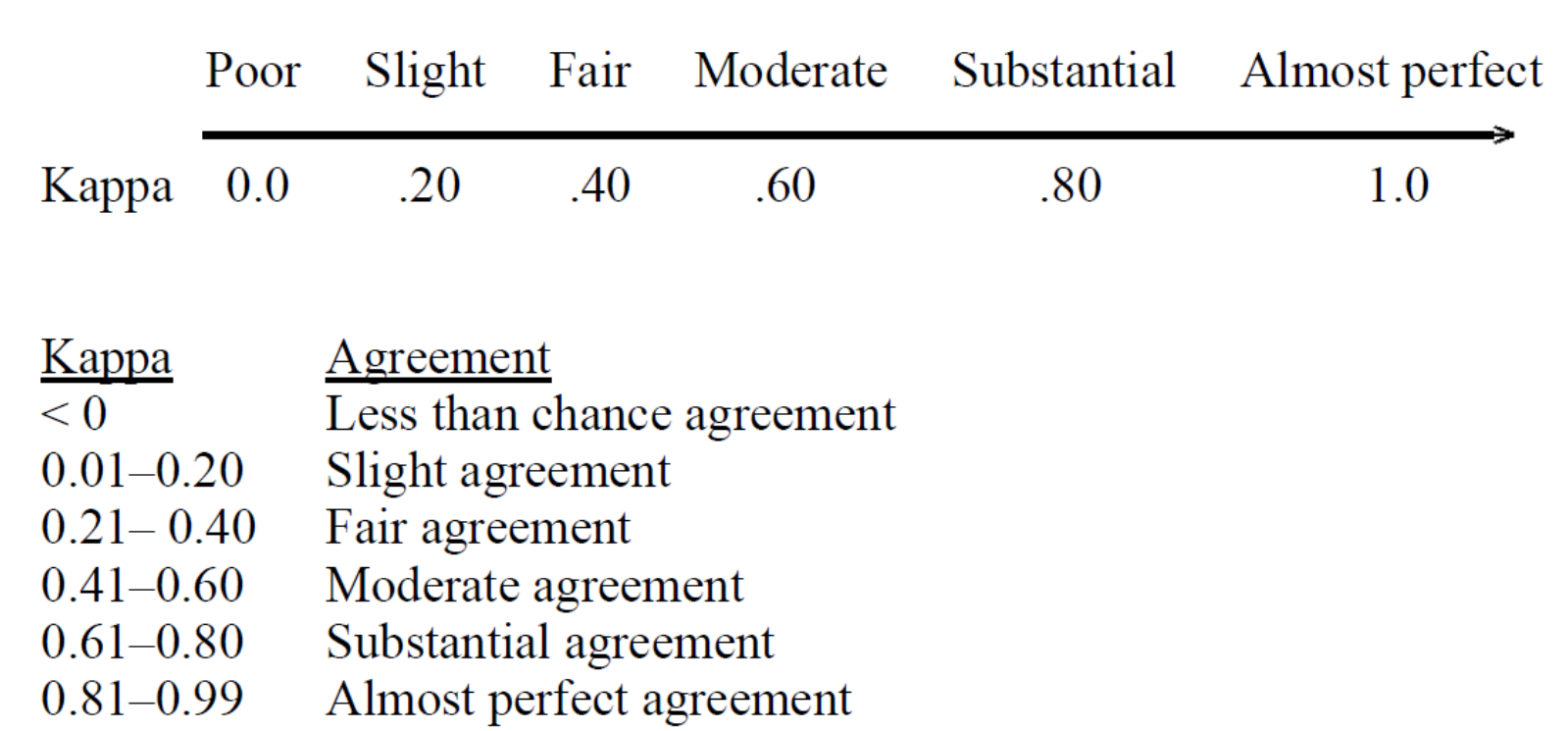

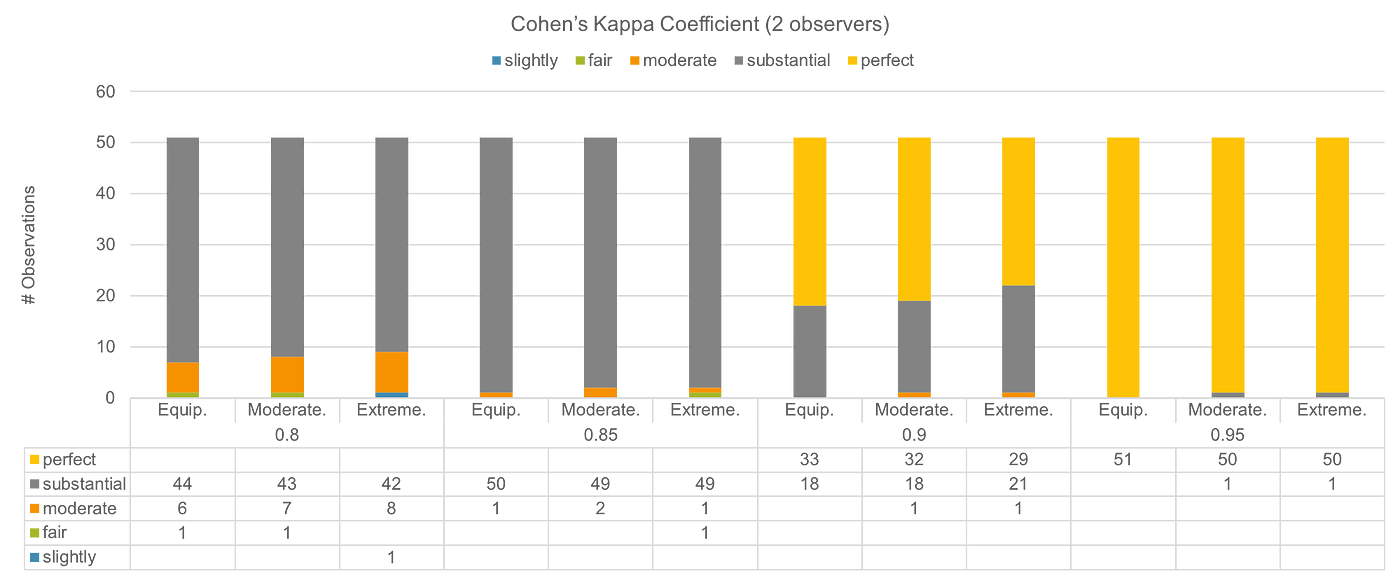

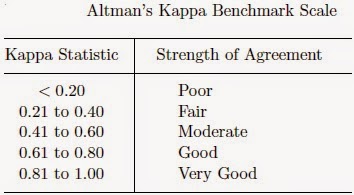

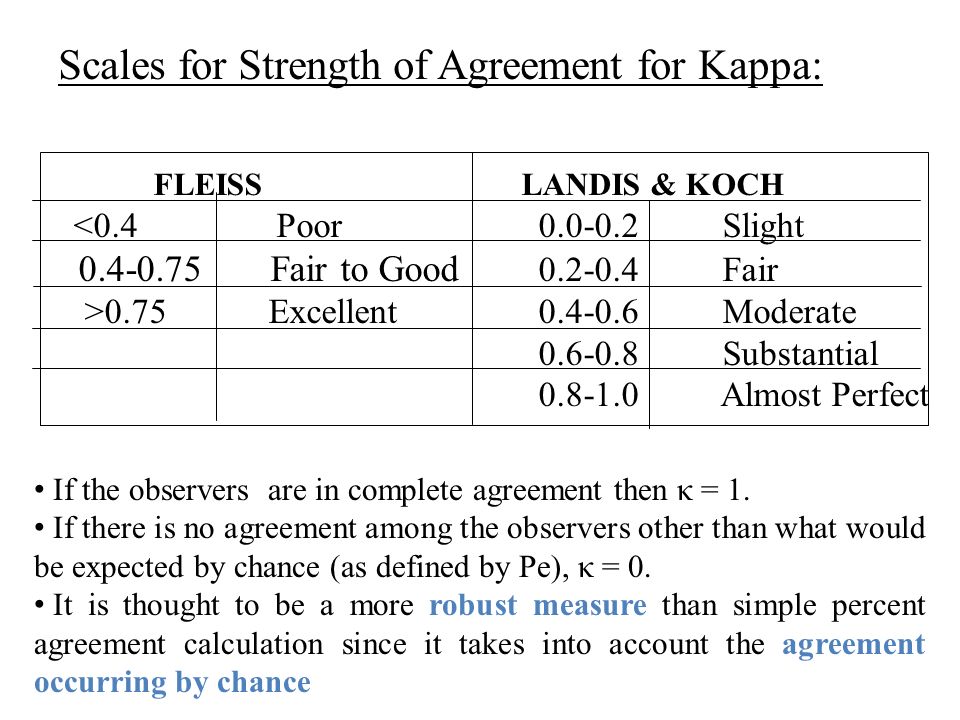

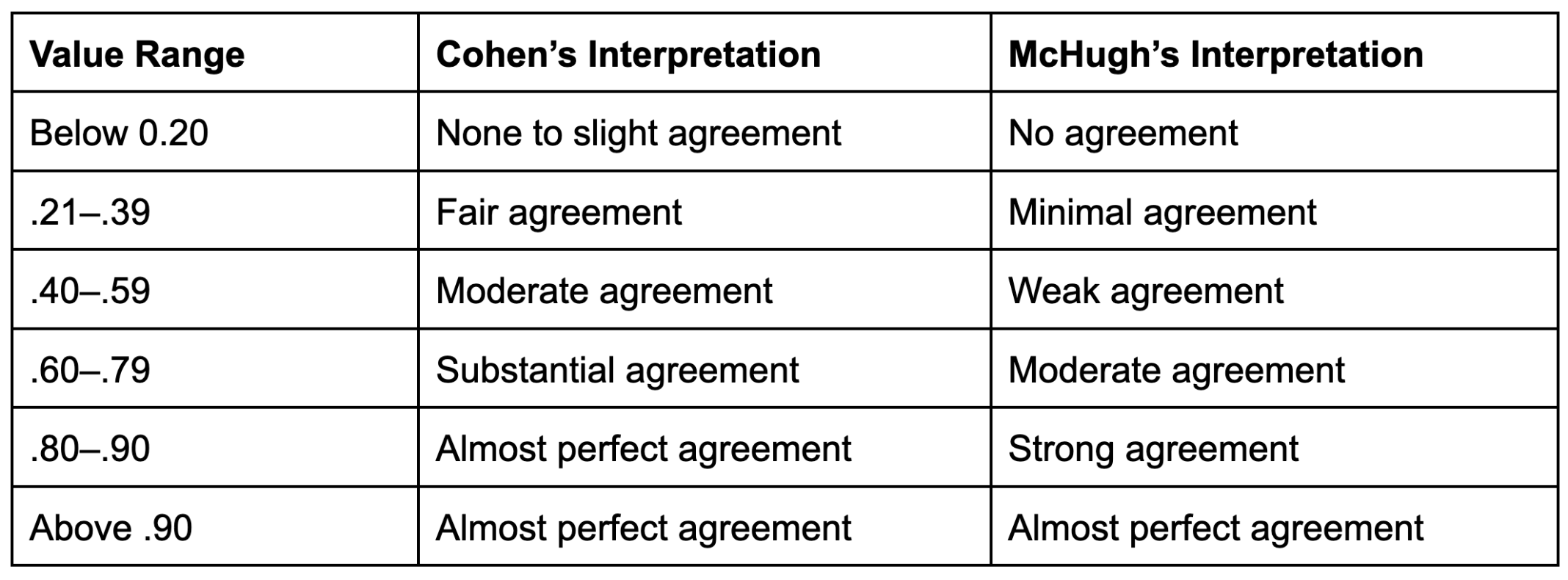

Interpretation of Kappa Values. The kappa statistic is frequently used… | by Yingting Sherry Chen | Towards Data Science

Putting the Kappa Statistic to Use - Nichols - 2010 - The Quality Assurance Journal - Wiley Online Library

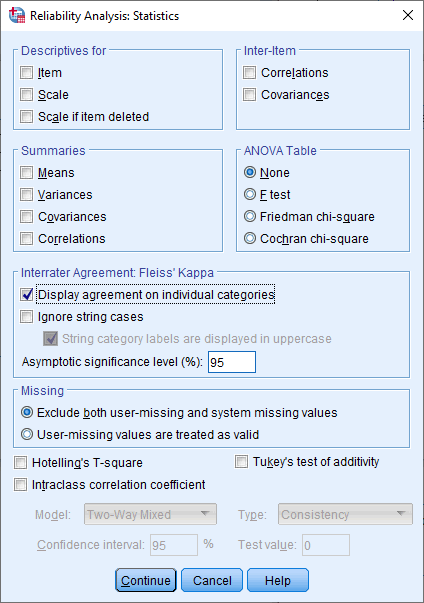

K. Gwet's Inter-Rater Reliability Blog: Benchmarking Agreement Coefficients | Inter-rater reliability: Cohen kappa, Gwet AC1/AC2, Krippendorff Alpha

![[PDF] Fuzzy Fleiss-kappa for Comparison of Fuzzy Classifiers | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/8aa54d5299fb48d6a7355c766573ecb520a43393/3-Table1-1.png)